Research Projects

Fairness in Social Network Analysis: Structural Bias & Algorithms

This project investigates how structural biases embedded in social networks influence algorithmic outcomes and fairness in AI-driven decision-making. We study how network topology, homophily, and community structure amplify inequality, causing underrepresentation of minority or marginalized groups. The project aims to develop fairness-aware algorithms for centrality, link prediction, and community detection that address these imbalances. Our broader goal is to establish theoretical and computational foundations for equitable network analysis, ensuring that social network algorithms recognize and mitigate bias rather than reinforce it.

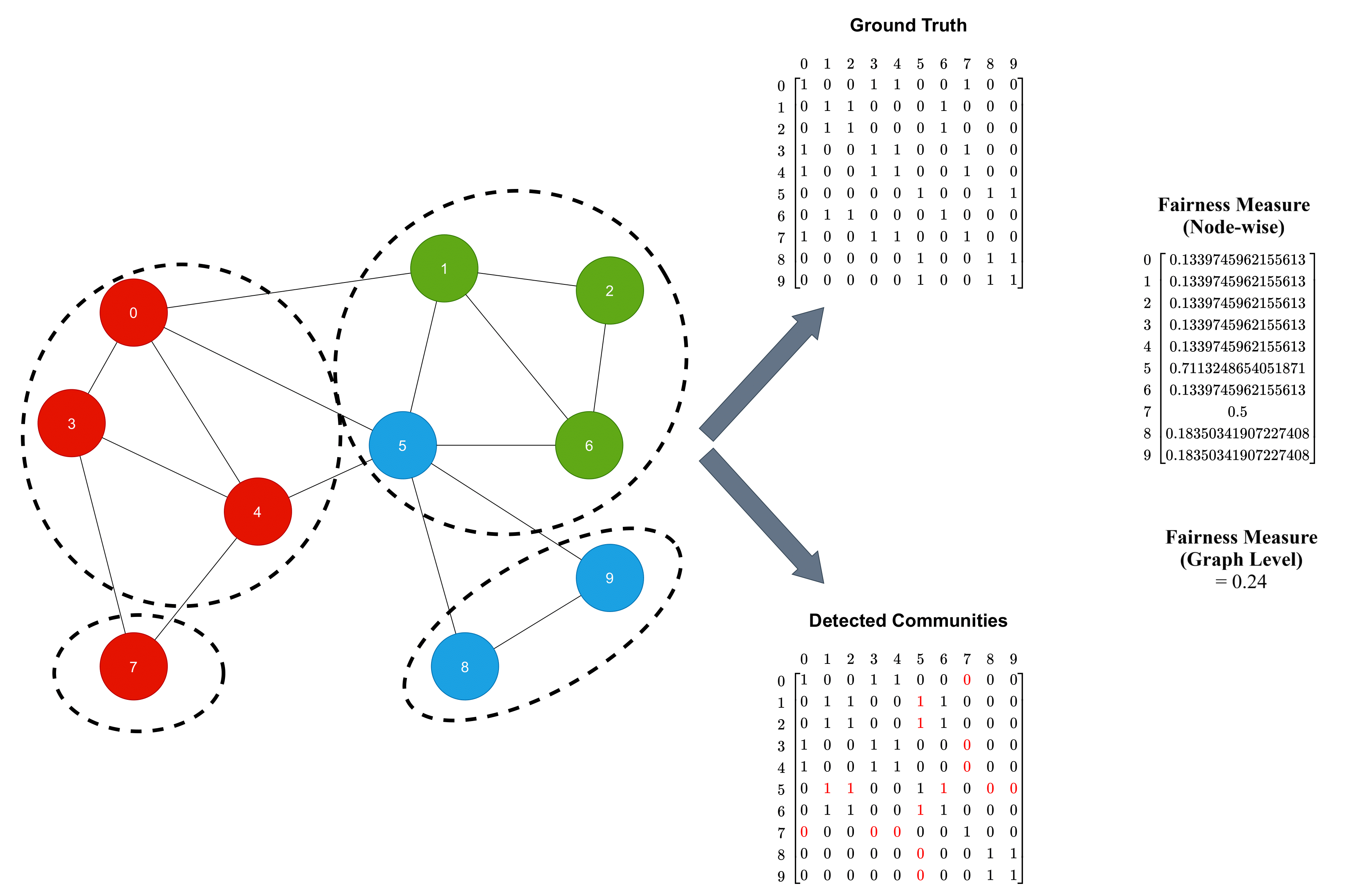

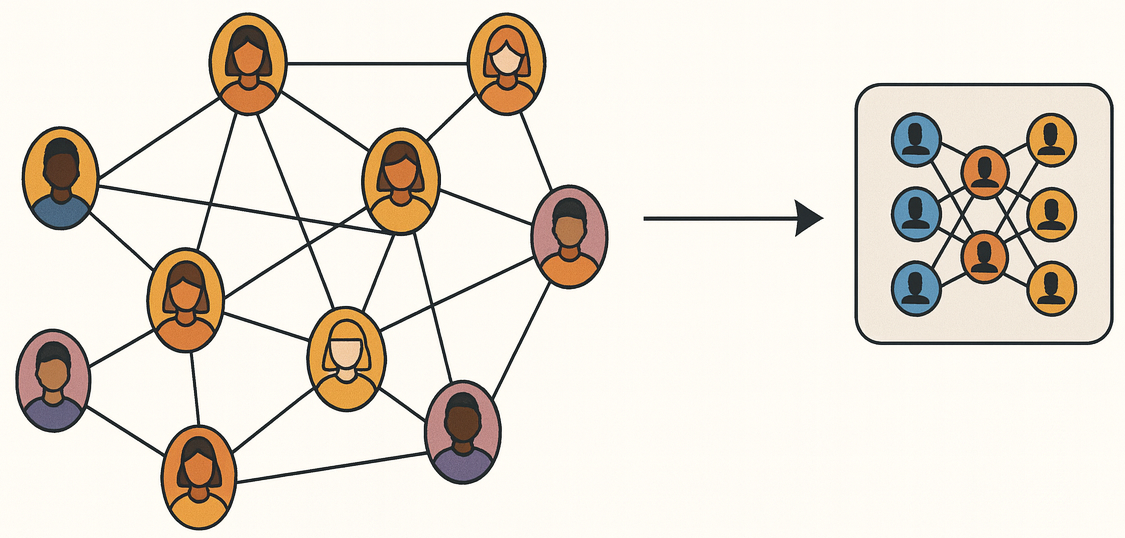

Fairness Metrics for Community Detection

The Fairness Metrics for Community Detection project develops principled measures to evaluate and ensure fairness in how communities are identified within complex networks. Because of structural or demographic imbalances in the data, traditional community detection algorithms often produce biased outcomes that overrepresent some groups and marginalize others. To address this, we have proposed novel group and individual fairness metrics that quantify disparities both across communities and at the node level. Building on this foundation, our goal is to extend these metrics to overlapping, multilayer, and hypergraph networks, capturing fairness in richer and more realistic network representations. This project aims to establish a standardized framework for assessing and mitigating algorithmic bias in community detection, paving the way toward equitable analysis of human-driven networks.
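The group and individual metrics proposed in this project are not reproduced here. As a rough illustration of what a group-level measure can look like, the sketch below compares each community's share of a protected group with that group's share of the whole network and reports the largest gap. The function name `representation_disparity`, the input format, and the choice of the maximum absolute gap are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def representation_disparity(communities, group):
    """Hypothetical group-level fairness score for a network partition.

    communities: dict mapping node -> community id
    group: dict mapping node -> bool (True if the node is in the protected group)
    Returns (gaps, worst): per-community difference between the community's
    protected-group share and the network-wide share, and the largest absolute gap.
    """
    overall_share = sum(group.values()) / len(group)

    # Collect the members of each community.
    members = defaultdict(list)
    for node, comm in communities.items():
        members[comm].append(node)

    gaps = {}
    for comm, nodes in members.items():
        share = sum(group[n] for n in nodes) / len(nodes)
        gaps[comm] = share - overall_share  # positive = overrepresented

    worst = max(abs(g) for g in gaps.values())
    return gaps, worst
```

For a four-node network split into {a, b} and {c, d}, with a and b in the protected group, each community deviates by 0.5 from the network-wide share of 0.5, so the worst-case gap is 0.5; a perfectly representative partition would score 0.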

Fairness-aware Fake News Mitigation & Influence Blocking

This project investigates how misinformation spreads through social networks and how intervention strategies can be made both effective and fair. Traditional influence-blocking algorithms focus solely on maximizing coverage, that is, removing or limiting the spread of fake news, but they often ignore whether specific demographic groups are disproportionately suppressed or amplified. This project aims to develop fairness-aware influence-blocking models that minimize misinformation spread while preserving equal representation in communication opportunities.

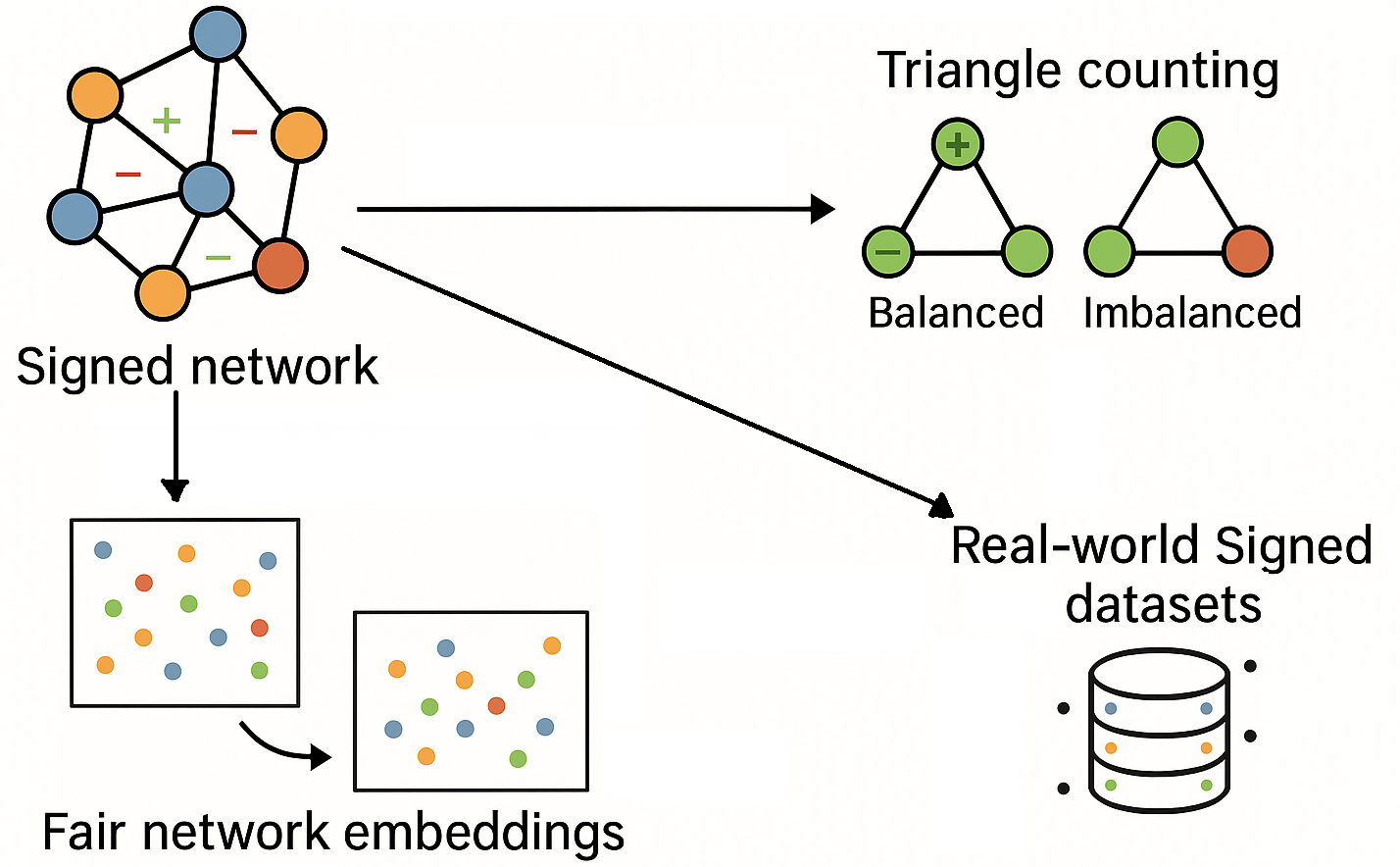

Algorithmic Fairness in Signed Networks

Signed networks, in which relationships can be positive (trust, friendship) or negative (conflict, distrust), offer a richer and more realistic view of social and information systems. However, existing AI models often overlook the structural and fairness implications of negative ties. This project investigates how biases emerge and propagate in signed networks and develops methods to mitigate them. We propose efficient algorithms to count and classify all types of signed triangles, providing a foundation for measuring structural balance and identifying unfair interaction patterns. Building on this, we design fair network embedding techniques that preserve both positive and negative relationships while ensuring equitable representation of diverse node groups. The project also involves collecting and curating real-world signed network datasets, enabling the development and benchmarking of fairness-aware algorithms for signed networks.
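The project's efficient counting algorithms are not described here. For orientation only, the naive sketch below enumerates each triangle of an undirected signed graph exactly once and classifies it by its number of negative edges. Under structural balance theory, triangles with an even number of negative edges (+++ and +--) are balanced and those with an odd number (++- and ---) are unbalanced. The edge-list format and the names `count_signed_triangles` and `balance_ratio` are assumptions, and the enumeration is not optimized for large graphs.

```python
from collections import Counter, defaultdict

# Triangle types keyed by the number of negative edges they contain.
TRIANGLE_TYPES = {0: "+++", 1: "++-", 2: "+--", 3: "---"}


def count_signed_triangles(edges):
    """Count signed triangles in an undirected signed graph (simple sketch).

    edges: iterable of (u, v, sign) with sign in {+1, -1} and orderable node ids.
    Returns a dict mapping each triangle type to its count.
    """
    adj = defaultdict(set)
    sign = {}
    for u, v, s in edges:
        if u == v:
            continue  # ignore self-loops
        adj[u].add(v)
        adj[v].add(u)
        sign[frozenset((u, v))] = s  # a repeated edge keeps its last sign

    counts = Counter()
    for u in adj:
        for v in adj[u]:
            if v <= u:
                continue
            # Common neighbours close a triangle; require u < v < w to count it once.
            for w in adj[u] & adj[v]:
                if w <= v:
                    continue
                negatives = sum(
                    sign[frozenset(pair)] < 0 for pair in ((u, v), (v, w), (u, w))
                )
                counts[negatives] += 1
    return {label: counts[k] for k, label in TRIANGLE_TYPES.items()}


def balance_ratio(triangle_counts):
    """Fraction of triangles that are balanced (even number of negative edges)."""
    total = sum(triangle_counts.values())
    balanced = triangle_counts["+++"] + triangle_counts["+--"]
    return balanced / total if total else float("nan")
```

For example, the edges `(1, 2, +1), (2, 3, +1), (1, 3, -1), (3, 4, -1), (4, 5, -1), (3, 5, +1)` form one `++-` triangle and one `+--` triangle, giving a balance ratio of 0.5.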

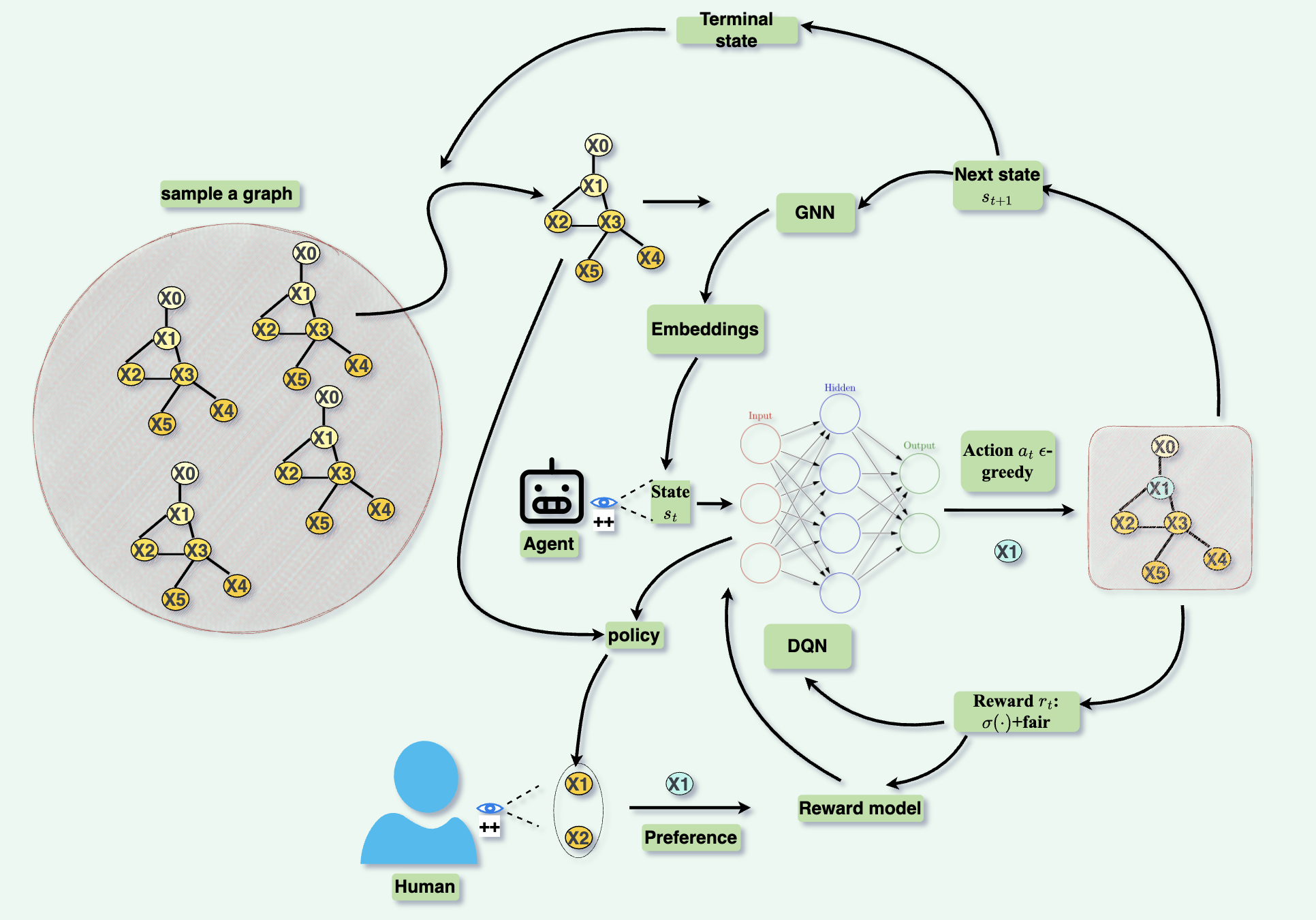

Deep Reinforcement Learning for Fair Network Analysis

The Deep Reinforcement Learning for Fair Network Analysis project investigates how reinforcement learning (RL) can be leveraged to promote fairness in complex social network algorithms. The research combines Deep Q-Networks (DQNs) with Graph Neural Networks (GNNs) and formulates network decision problems as a Markov Decision Process (MDP), enabling adaptive decision-making that avoids the disproportionate exclusion of minority groups. By learning policies that generalize across problem instances, RL offers a scalable and efficient framework for addressing fairness challenges in network analysis. The project also incorporates reinforcement learning from human feedback (RLHF) to refine reward models, and it extends the approach to fair rumor blocking and user incentivization. In addition, we explore policy gradient methods such as Actor–Critic (AC) to design robust, fairness-aware strategies for real-world social network scenarios.

Fairness in Graph Neural Networks: Imbalanced Classes & Heterophily

This project focuses on uncovering and mitigating bias in graph-based deep learning models. Graph Neural Networks (GNNs) have become powerful tools for analyzing relational data, yet their performance and fairness often deteriorate when networks exhibit class imbalance or heterophily, a setting in which connected nodes tend to belong to different classes. These structural challenges can lead to unfair predictions, disproportionately affecting underrepresented groups or minority classes. This project develops fairness-aware GNN architectures and training strategies that ensure equitable learning under such complex network conditions. By integrating bias correction, reweighting mechanisms, and fairness constraints into the GNN learning pipeline, we aim to improve both model robustness and ethical accountability.
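One common reweighting mechanism, shown here only as an example and not necessarily the one used in this project, weights each class inversely to its frequency so that minority classes contribute more to the training loss. The sketch uses the same formula as scikit-learn's `class_weight="balanced"` heuristic, n_samples / (n_classes * n_c); the function name `balanced_class_weights` is hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency class weights: n_samples / (n_classes * count_of_class).

    labels: sequence of class labels for the labeled (training) nodes.
    Returns a dict mapping each class label to its weight; rarer classes get
    larger weights, so their errors count more in a weighted loss.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}
```

With training labels `[0, 0, 0, 1]`, class 0 receives a weight of about 0.667 and class 1 a weight of 2.0. In PyTorch, such values could be passed as the `weight` tensor of `torch.nn.CrossEntropyLoss` when training a GNN node classifier.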

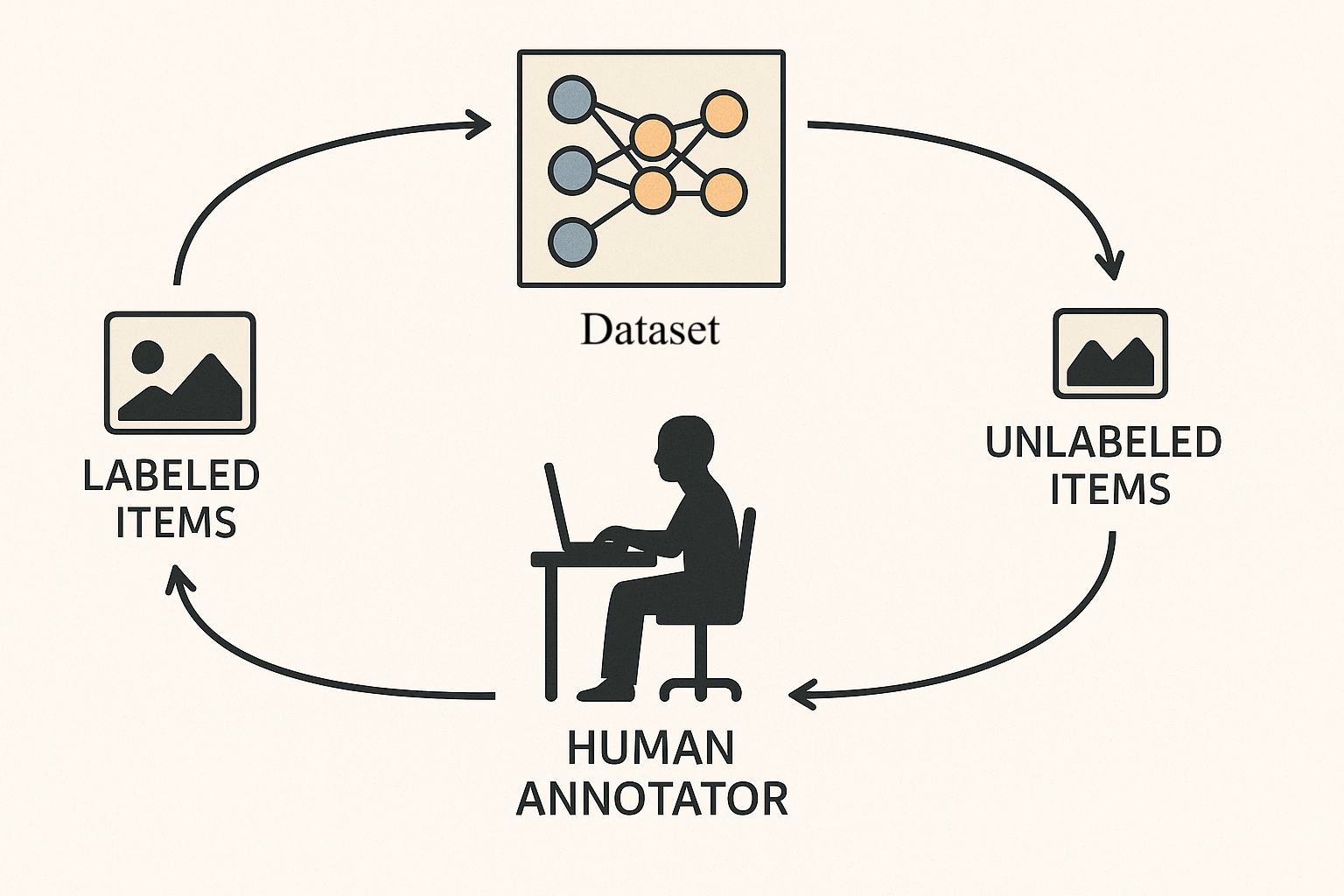

Fair Active Learning

The Fair Active Learning project designs machine learning models that actively select data samples for labeling while maintaining fairness across demographic groups. Traditional active learning strategies tend to overrepresent majority groups or easily classifiable samples, reinforcing existing biases in data-driven systems. This project aims to develop fairness-aware query selection mechanisms that balance informativeness and representativeness, ensuring equitable model improvement across all populations. By integrating fairness constraints directly into the active learning loop, we seek to create adaptive algorithms that minimize bias during model training, resulting in AI systems that are both data-efficient and socially responsible.
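A fairness-aware query rule can be built in many ways; the version below is a hypothetical illustration, not the project's mechanism. It scores each unlabeled sample by its predictive entropy (informativeness) plus a bonus proportional to how far the sample's demographic group falls short of an equal share of the labeled set (representativeness). The function names, the equal-share target, and the trade-off weight `lam` are all assumptions.

```python
import math
from collections import Counter


def entropy(probs):
    """Predictive entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_query(pool, labeled_groups, lam=0.5):
    """Pick the next sample to label (hypothetical fairness-aware rule).

    pool: list of (sample_id, predicted_class_probs, group) for unlabeled samples.
    labeled_groups: the group of every sample labeled so far.
    lam: assumed trade-off between informativeness and group representation.
    """
    counts = Counter(labeled_groups)
    total = sum(counts.values())
    groups = {g for _, _, g in pool} | set(counts)
    target_share = 1 / len(groups)  # assumption: each group should get an equal share

    def score(item):
        _, probs, group = item
        share = counts[group] / total if total else 0.0
        deficit = max(0.0, target_share - share)  # shortfall of this group's share
        return entropy(probs) + lam * deficit

    return max(pool, key=score)[0]
```

With nine labeled samples from group M and one from group F, this rule prefers a slightly less uncertain F sample over an M sample when `lam=0.5`, and reverts to plain uncertainty sampling when `lam=0`. Because entropy and the share deficit are on different scales, `lam` would need tuning in practice.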

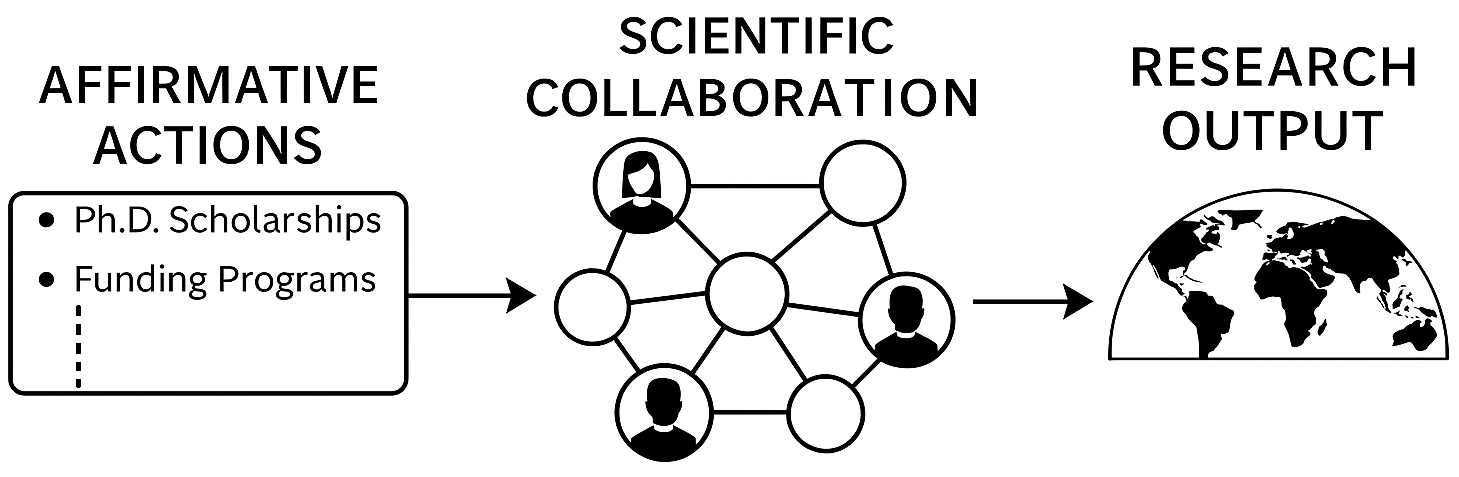

Modeling the Impact of Affirmative Actions in Scientific Collaboration

This project explores how national policies aimed at promoting inclusivity and research capacity, commonly known as affirmative actions, shape the global scientific landscape. While developing countries have historically lagged in scientific output due to limited funding and infrastructure, many have recently implemented targeted programs such as international Ph.D. scholarships and return incentives to reverse brain drain and stimulate innovation. Using network science techniques, this project models and compares the evolution of co-authorship and collaboration networks across countries with and without affirmative policies, analyzing how such initiatives affect research productivity, international partnerships, and long-term scientific growth. By quantifying these impacts, the project aims to uncover causal links between policy interventions and scientific advancement, offering data-driven insights to guide future policy design for equitable and sustainable global research development.

Algorithmic Profiling and Web Tracking: Privacy, Politics, and Data Governance

This project investigates how data collection and algorithmic profiling practices shape user privacy and regulatory landscapes across regions. While web tracking enables personalization and targeted advertising, it also fuels large-scale data extraction and deepens surveillance capitalism. We examine how these dynamics unfold in countries with different political and regulatory contexts. By combining technical analyses of tracking mechanisms with policy and governance studies, we explore how varying institutional structures and political orientations influence privacy norms, accountability, and user rights. Ultimately, the project aims to produce empirical insights and actionable policy recommendations that advance equitable and transparent governance of data-driven digital ecosystems.